GRPO - Group Relative Policy Optimization: How DeepSeek Trains Reasoning Models

Discover how GRPO, an innovative reinforcement learning technique, enables efficient training of compact AI models that rival industry giants in reasoning tasks.

The field of AI reasoning capabilities has seen remarkable advancements recently, with DeepSeek's models demonstrating impressive reasoning skills while using fewer parameters than competitors.

Behind these achievements lies an innovative reinforcement learning technique called Group Relative Policy Optimization (GRPO). This method revolutionizes how language models are trained for reasoning tasks, offering both performance improvements and computational efficiency.

The Challenge of Training Reasoning Models

Training language models to reason effectively—to solve math problems, write code, or work through logical puzzles step-by-step—has traditionally been difficult. Models might provide quick answers without showing their reasoning process, or they might follow flawed logic yet somehow reach correct conclusions.

The standard approach has been supervised fine-tuning (SFT), where models learn from human-created examples that demonstrate proper reasoning. But this approach has limits:

It's expensive to create high-quality reasoning examples

Models can only learn patterns present in the training data

The quality ceiling is limited by the best human examples

This is where reinforcement learning comes in—specifically, Group Relative Policy Optimization.

What is GRPO?

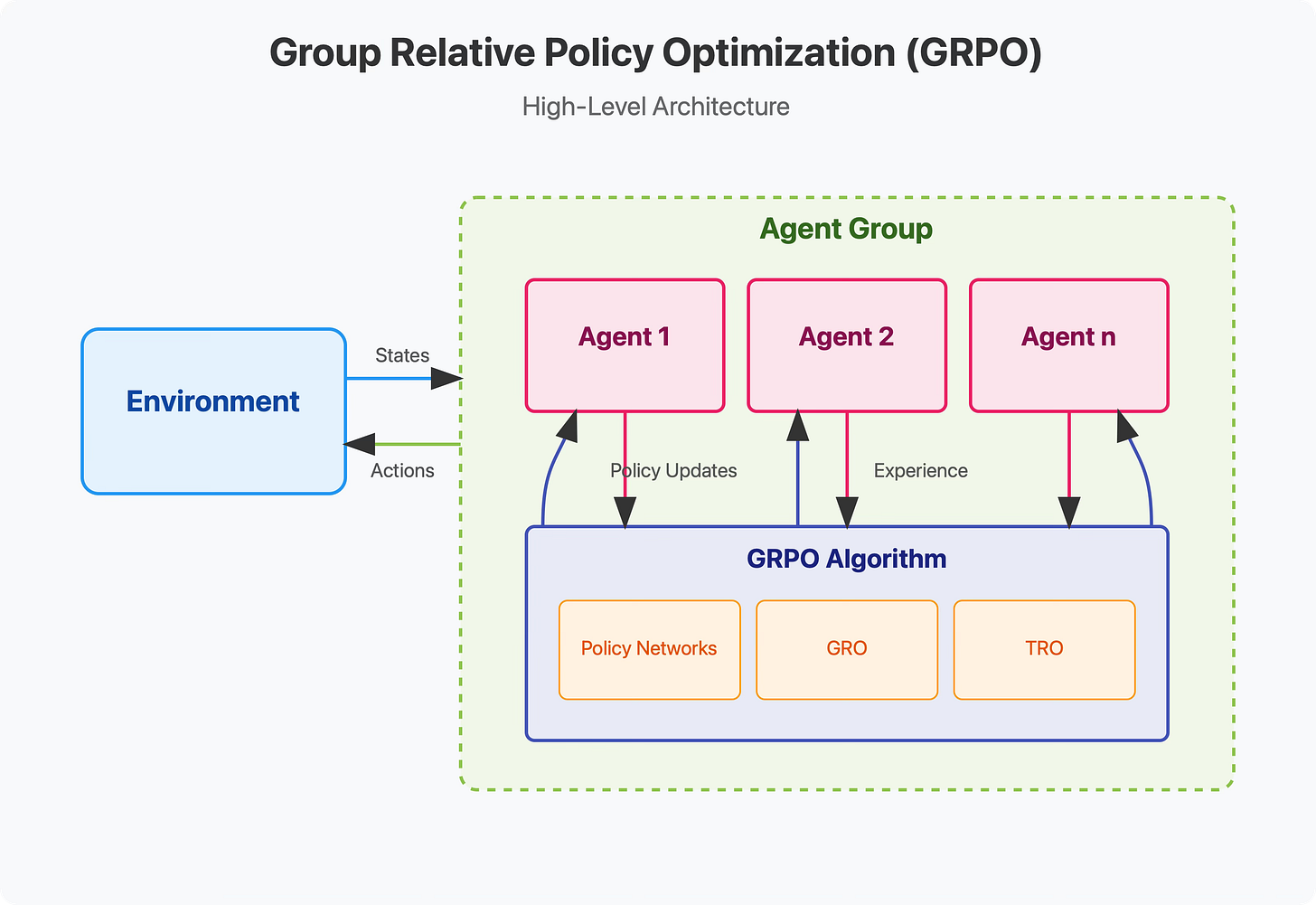

Group Relative Policy Optimization is a reinforcement learning algorithm introduced by DeepSeek researchers to efficiently train large language models for reasoning tasks. It builds upon the popular Proximal Policy Optimization (PPO) algorithm but introduces key innovations that make it more efficient and effective for training reasoning capabilities.

The core insight of GRPO is that we can make models play against themselves in a game-like scenario. Rather than learning from static examples, models generate multiple solutions to problems, evaluate those solutions, and gradually improve their reasoning process.

GRPO is particularly notable for:

Eliminating the need for a separate value function/critic model

Using group-based advantage estimation

Directly incorporating KL divergence into the loss function

Achieving superior results with dramatically lower computational costs

Let's break down how it actually works.

GRPO: The Mechanics

To understand GRPO, let's walk through a simple example of solving an equation:

Problem: Solve for x in the equation 2x + 4 = 10

Now imagine a model generating three different solutions:

Solution 1:

Step 1: Add 4 to both sides

2x + 4 + 4 = 10 + 4

2x + 8 = 14

Step 2: Divide both sides by 2

x + 4 = 7

x = 3

Wait, that's wrong. Let me recalculate.

x = 4

Solution 2:

Step 1: Multiply both sides by 2

4x + 8 = 20

Step 2: Subtract 8 from both sides

4x = 12

x = 3

Solution 3:

Step 1: Subtract 4 from both sides

2x + 4 - 4 = 10 - 4

2x = 6

Step 2: Divide both sides by 2

x = 3

Step 1: Generating a Group of Solutions

The first step in GRPO is to generate multiple solutions to the same problem. Unlike traditional approaches that might generate just one solution per problem, GRPO instructs the model to generate multiple solutions (typically 4-8, but can be scaled up to 64 or more) for each problem.

These solutions can be wildly different in quality—some might be completely wrong, others partially correct, and some perfectly correct. The diversity of solutions gives the model various learning signals.

Step 2: Scoring Each Solution

Next, each solution is scored based on several factors:

Correctness: Did the solution arrive at the right answer?

Step-by-step reasoning: Did the solution show clear work?

Step correctness: Is each individual step mathematically valid?

For our example, we might score them as follows:

Solution 1: Poor (-0.5) – Steps are incorrect and final answer is wrong

Solution 2: Medium (0.5) – Steps are suboptimal but final answer is correct

Solution 3: Good (1.0) – Steps are optimal and final answer is correct

The scoring can be done by rule-based systems, external verification tools, or even the model itself in some cases.

Step 3: Computing the Advantage

Here's where GRPO differs from other RL approaches. Instead of using a separate value function (critic) to estimate expected rewards, GRPO uses the group's average reward as a baseline.

The advantage for each solution is calculated as:

Advantage = Solution Score - Average Group Score

If the average score is 0.33 (the average of -0.5, 0.5, and 1.0), then:

Solution 1: -0.5 - 0.33 = -0.83

Solution 2: 0.5 - 0.33 = 0.17

Solution 3: 1.0 - 0.33 = 0.67

Solutions that score above average receive positive advantages, while those below average receive negative advantages.

Step 4: Policy Optimization

The heart of GRPO is the policy optimization step. The algorithm computes the probability ratio between the new policy (post-update) and the old policy (pre-update) for each solution. This ratio tells us how much the policy has changed.

For example, if the old policy had a 30% chance of generating Solution 3 (the best one) and the new policy has a 70% chance, the ratio would be 2.33 (70%/30%).

GRPO then computes the loss function:

L = E[min(r*A, clip(r, 1-ε, 1+ε)*A) - β*KL(πθ_old || πθ)]Where:

r is the probability ratio

A is the advantage

clip() constrains the ratio to prevent too large updates

KL() is the Kullback-Leibler divergence between old and new policies

β is a hyperparameter that balances exploration and exploitation

This loss function encourages the model to:

Increase the probability of generating good solutions

Decrease the probability of generating bad solutions

Not deviate too much from the previous policy

Step 5: Iteration

The process repeats over many iterations, gradually improving the model's ability to solve problems with clear, step-by-step reasoning.

The GRPO Loss Function Explained

The GRPO loss function might look intimidating, but we can break it down into understandable components:

Where:

G is the number of responses per problem

O_g is the number of steps in response g

r_g,t is the probability ratio for step t of response g

A_g,t is the advantage for step t of response g

ε is the clip parameter (typically 0.2)

β is the KL divergence coefficient

The formula has several key components:

Average over responses: (1/G) * Σ[...]

Average over steps: (1/O_g) * Σ[...]

Clipped objective: min(r*A, clip(r, 1-ε, 1+ε)*A)

KL penalty: β * KL(πθ_old || πθ)

The clipping function prevents extreme policy updates. If the probability ratio exceeds 1+ε (e.g., 1.2) or falls below 1-ε (e.g., 0.8), it gets clipped to those values.

The KL divergence term penalizes large changes to the policy. This is crucial for stability—you don't want the model to dramatically change its behavior based on a limited set of examples.

GRPO in DeepSeek Models

DeepSeek has applied GRPO to train several impressive reasoning models:

DeepSeekMath

DeepSeekMath 7B achieved a 51.7% accuracy on the challenging MATH benchmark without using external tools. This approaches GPT-4's performance despite using significantly fewer parameters.

The training process involved:

Initial supervised fine-tuning on mathematical problems

GRPO training with math-specific reward functions

Further supervised fine-tuning to maintain general capabilities

The model was trained to use a specific format involving <think> and <answer> tags, where <think> contains the step-by-step reasoning and <answer> contains the final result.

DeepSeek-R1

DeepSeek-R1 extended the GRPO approach beyond math to general reasoning tasks including coding, logical puzzles, and analytical problems. The training process involved alternating between:

Supervised fine-tuning on general datasets

GRPO training on synthetic reasoning datasets (approximately 600,000 samples)

Importantly, DeepSeek-R1 rivals OpenAI's o1 model in reasoning tasks while using a more efficient training methodology.

DeepSeek-R1-Zero

Perhaps most impressively, DeepSeek created DeepSeek-R1-Zero, which skipped supervised fine-tuning altogether and used pure reinforcement learning with GRPO. This led to emergent behaviors like self-evaluation and "aha moments" where the model recognizes errors in its reasoning and corrects itself.

Advantages Over PPO

GRPO offers several key advantages over traditional Proximal Policy Optimization:

Memory Efficiency

By eliminating the separate value function model, GRPO reduces memory requirements by 40-60%. This means you can train larger models on the same hardware, or train equivalent models on more modest hardware.

A practical example: While PPO for a 7B model might require high-end GPUs with 40GB+ VRAM, GRPO can work with consumer-grade GPUs with 16GB VRAM.

Computational Cost

GRPO dramatically reduces the computational cost of training. According to DeepSeek's research, GRPO can be up to 18 times more cost-efficient than PPO in certain scenarios.

This means a training run that might cost $10,000 with PPO could potentially be done for around $556 with GRPO—a game-changer for research labs and smaller organizations.

Stability

The group-based advantage estimation provides more stable updates than traditional methods. By comparing each solution to the average of the group, GRPO reduces variance in the advantage estimates.

Better Reasoning

Perhaps most importantly, GRPO seems particularly effective at teaching models to reason. The focus on step-by-step solutions and the ability to compare multiple approaches to the same problem allows models to develop robust reasoning capabilities.

Tools and Frameworks

Several tools can help implement GRPO:

Unsloth: A library that optimizes memory usage for training language models

TRL (Transformer Reinforcement Learning): A library by Hugging Face that supports various RL algorithms including GRPO-like approaches

Custom frameworks: DeepSeek's own implementation or other custom setups

Challenges and Solutions

Reward Hacking

One challenge with RL approaches is "reward hacking," where models find ways to maximize rewards without actually improving the desired behavior.

For example, a model might learn to write convincing-looking steps that aren't mathematically valid but somehow lead to the correct answer.

GRPO addresses this by:

Scoring individual steps, not just the final answer

Using diverse reward functions that capture different aspects of quality

Limiting policy updates to prevent extreme behavior changes

Training Stability

RL training can be unstable, with performance sometimes declining unexpectedly.

GRPO improves stability through:

Group-based advantage estimation

Clipping to prevent extreme updates

KL divergence penalty to maintain proximity to the original policy

Computational Constraints

Even with GRPO's efficiency improvements, training reasoning models remains computationally intensive.

Practical solutions include:

Starting with smaller models (1.5B-7B) before scaling up

Using techniques like LoRA (Low-Rank Adaptation) to reduce trainable parameters

Leveraging distributed training across multiple GPUs

Emergent Behaviors

One of the most fascinating aspects of GRPO training is the emergence of behaviors that weren't explicitly programmed.

Self-Correction

DeepSeek researchers observed models having "aha moments" where they:

Start solving a problem with one approach

Realize the approach is flawed

Explicitly note the error

Switch to a better approach

For example:

<think>

Let's solve for x in 2x + 4 = 10.

First, I'll subtract 4 from both sides:

2x + 4 - 4 = 10 - 4

2x = 6

Now I'll divide both sides by 2:

2x/2 = 6/2

x = 3

Actually, wait. Let me double-check this:

2(3) + 4 = 6 + 4 = 10 ✓

So x = 3 is correct.

</think>

<answer>x = 3</answer>This self-correction behavior emerges naturally from the reward structure—models that correct their mistakes get better rewards than those that persist with errors.

Time Extension

Models trained with GRPO learn to extend their reasoning time when faced with difficult problems. They might:

Break down complex problems into subproblems

Try multiple approaches when uncertain

Verify their answers with additional calculations

This resembles human problem-solving behavior, where we naturally spend more time on difficult problems and verify our work.

GRPO vs Traditional Methods: A Comparison

Let's compare GRPO with other approaches for training reasoning models:

Supervised Fine-tuning (SFT)

SFT:

Uses human-generated examples

Quality limited by training data

Requires extensive high-quality data

Relatively simple training process

GRPO:

Uses self-generated examples with rewards

Can exceed quality of training data

Requires good reward functions

More complex training process

Proximal Policy Optimization (PPO)

PPO:

Uses separate value function (critic)

Higher memory requirements

Typically more expensive to train

Well-established in the field

GRPO:

No separate value function

Lower memory requirements

More cost-efficient

Newer approach with growing adoption

Rejection Sampling

Rejection Sampling:

Generate multiple outputs and pick the best

Simple implementation

No learning between iterations

Works well for inference, not training

GRPO:

Generate multiple outputs and learn from all

More complex implementation

Learning improves over iterations

Specifically designed for training

The Future of GRPO

GRPO represents an important step toward more efficient and effective training of reasoning models. Several trends suggest its increasing importance:

Democratization of RL for Language Models

By reducing computational requirements, GRPO makes reinforcement learning more accessible to smaller organizations and individual researchers. This democratization could accelerate innovation in the field.

Multimodal Reasoning

The principles behind GRPO could extend to multimodal reasoning tasks, where models must reason about text, images, and other modalities together. The group-based approach works regardless of the input type.

Human Feedback Integration

While GRPO can work with algorithmic rewards, it can also incorporate human feedback. Future systems might combine automated evaluation with human judgment to create more nuanced reward signals.

Exercises and Challenges

Want to experiment with GRPO yourself? Here are some challenges to try:

Implement a basic GRPO trainer for a small language model (1-2B parameters) on a simple math dataset like GSM8K.

Design a reward function for code generation that evaluates both correctness and code quality.

Experiment with different group sizes (4, 8, 16, 32) and observe the impact on training stability and performance.

Create a hybrid reward function that combines algorithmic verification with a small language model acting as a judge.

Implement curriculum learning where problems gradually increase in difficulty based on the model's performance.

Conclusion

Group Relative Policy Optimization represents a significant advancement in training language models for reasoning tasks. By eliminating the need for a separate value function, GRPO reduces memory requirements and computational costs while improving model performance.

The approach has enabled DeepSeek to create powerful reasoning models like DeepSeekMath and DeepSeek-R1 that rival much larger models from other organizations. More importantly, GRPO demonstrates how reinforcement learning can be made accessible and efficient for training large language models.

As the field continues to evolve, GRPO and similar techniques will likely play an increasingly important role in teaching AI systems to reason effectively across diverse domains.

Further Reading

DeepSeek-R1 technical report

"Proximal Policy Optimization Algorithms" by Schulman et al.

Unsloth documentation for efficient training

TRL (Transformer Reinforcement Learning) documentation