How the Operating System Manages Blocking I/O

From Thread Blocking to Resumption: Understanding Context Switching, Interrupt Handling, and Scheduling in Modern Operating Systems

Efficient management of threads and processes is critical to ensuring optimal CPU utilization and responsiveness. One of the most common scenarios where this efficiency is tested is when a thread or process performs a blocking I/O operation.

This article will explore in detail how the operating system (OS) handles such situations, from the moment a thread blocks on I/O to the point where it is resumed after the I/O operation completes.

1. Understanding Blocking I/O and Thread States

What is Blocking I/O?

Blocking I/O refers to an operation where a thread or process must wait for an external event to complete before it can proceed. Examples include reading from a file, waiting for network data, or writing to a disk. During this waiting period, the thread cannot perform any useful work, so it is said to be "blocked."

Thread States in the OS

To manage threads effectively, the OS categorizes them into different states:

Runnable (Ready): The thread is ready to execute and is waiting for CPU time.

Running: The thread is currently executing on a CPU core.

Blocked (Waiting): The thread is waiting for an external event (e.g., I/O completion) and cannot proceed.

Terminated: The thread has finished execution and is no longer active.

When a thread performs a blocking I/O operation, it transitions from the Running state to the Blocked state. The OS then removes it from the CPU and schedules another thread to run.
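The following minimal Python sketch models the four states and the moves between them. The names `ThreadState`, `ALLOWED`, and `transition` are hypothetical. The transition table is an assumption built from the states above, plus preemption (Running back to Runnable). Real kernels track more states; Linux, for example, distinguishes interruptible from uninterruptible sleep.

```python
from enum import Enum, auto

class ThreadState(Enum):
    RUNNABLE = auto()    # ready, waiting for CPU time
    RUNNING = auto()     # executing on a CPU core
    BLOCKED = auto()     # waiting for an external event such as I/O completion
    TERMINATED = auto()  # finished, no longer active

# Hypothetical transition table: which states each state may move to.
ALLOWED = {
    ThreadState.RUNNABLE: {ThreadState.RUNNING},
    ThreadState.RUNNING: {ThreadState.RUNNABLE,    # preempted
                          ThreadState.BLOCKED,     # issued blocking I/O
                          ThreadState.TERMINATED}, # finished
    ThreadState.BLOCKED: {ThreadState.RUNNABLE},   # I/O completed
    ThreadState.TERMINATED: set(),
}

def transition(current, new):
    """Return the new state, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.name} -> {new.name}")
    return new

# The blocking I/O path: Running -> Blocked -> Runnable -> Running.
state = ThreadState.RUNNING
for nxt in (ThreadState.BLOCKED, ThreadState.RUNNABLE, ThreadState.RUNNING):
    state = transition(state, nxt)
print(state.name)  # RUNNING
```

A blocked thread never returns directly to Running. When its I/O completes, it becomes Runnable and must wait for the scheduler to pick it.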

2. Context Switching: The Heart of Multitasking

What is a Context Switch?

A context switch is the mechanism by which the OS saves the state of the currently running thread and restores the previously saved state of another thread so that it can execute. Context switching lets a CPU core alternate between threads, so many threads can make progress even when there are more threads than cores.

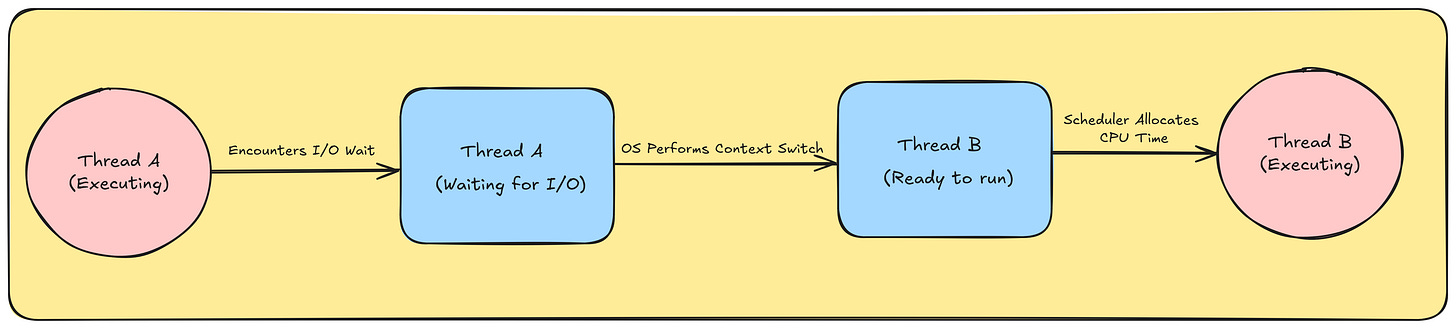
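A toy model shows what "saving" and "restoring" state means. The sketch below assumes a hypothetical CPU with only a program counter, a stack pointer, and four general-purpose registers. `Context`, `CPU`, and `context_switch` are illustrative names. A real kernel performs this step in architecture-specific assembly, and when it switches between processes it also switches the address space.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    """Hypothetical saved register state of one thread."""
    pc: int = 0                                           # program counter
    sp: int = 0                                           # stack pointer
    regs: list = field(default_factory=lambda: [0] * 4)   # general-purpose registers

@dataclass
class CPU:
    """A toy CPU whose registers belong to whichever thread is running."""
    pc: int = 0
    sp: int = 0
    regs: list = field(default_factory=lambda: [0] * 4)

def context_switch(cpu: CPU, old: Context, new: Context) -> None:
    # Save the outgoing thread's registers into its context...
    old.pc, old.sp, old.regs = cpu.pc, cpu.sp, list(cpu.regs)
    # ...then load the incoming thread's saved registers onto the CPU.
    cpu.pc, cpu.sp, cpu.regs = new.pc, new.sp, list(new.regs)

cpu = CPU(pc=100, sp=0x7000, regs=[1, 2, 3, 4])   # Thread A is running
ctx_a = Context()
ctx_b = Context(pc=500, sp=0x9000, regs=[9, 9, 9, 9])
context_switch(cpu, ctx_a, ctx_b)                 # A -> B
print(cpu.pc, ctx_a.pc)                           # 500 100
context_switch(cpu, ctx_b, ctx_a)                 # B -> A: A resumes at pc 100
print(cpu.pc)                                     # 100
```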

How Context Switching Works During Blocking I/O

Thread A Issues a Blocking I/O Request: Suppose Thread A is running and issues a read() system call to read data from a file.

Thread A Enters the Blocked State: The OS finds that the requested data is not yet available (for example, it must still be fetched from the disk), submits the request to the device, and determines that Thread A cannot proceed until the I/O operation completes. It saves Thread A's state (registers, program counter, stack pointer, etc.) and marks it as Blocked.

Thread A is Removed from the CPU: The OS removes Thread A from the CPU and places it in a waiting queue associated with the I/O operation.

Thread B is Scheduled: The OS selects another runnable thread (Thread B) from the ready queue, restores its state, and starts executing it on the CPU.
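The same sequence is visible from user space. The following Python sketch reads from a pipe created with `os.pipe()` instead of a file, because a read from an empty pipe reliably blocks, while a file read may be served straight from memory. The main thread plays the role of Thread B. CPython releases its global interpreter lock while a thread is blocked in `os.read`, so the main thread keeps running. The thread and event names are illustrative.

```python
import os
import threading
import time

events = []                      # records what happened, in order
read_fd, write_fd = os.pipe()    # empty pipe: reading from it blocks

def thread_a():
    events.append("A: calling read()")
    data = os.read(read_fd, 1024)    # A sleeps in the kernel until data arrives
    events.append(f"A: read() returned {data!r}")

a = threading.Thread(target=thread_a)
a.start()

time.sleep(0.1)                      # give A time to reach read() and block
still_blocked = a.is_alive()         # True: A cannot finish before data is written
events.append("B: doing other work while A is blocked")

os.write(write_fd, b"hello")         # data arrives, so A's read() can complete
a.join()
os.close(read_fd)
os.close(write_fd)

print(still_blocked)                 # True
print("\n".join(events))
```

While A sits in `os.read`, the kernel holds it in the Blocked state and does not schedule it. Writing to the pipe is what makes it runnable again.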

3. I/O Completion and Interrupt Handling

How the OS Knows When I/O Completes

When the I/O operation completes (e.g., data is read from the disk), the hardware generates an interrupt. An interrupt is a signal to the CPU that an event requiring immediate attention has occurred.

Interrupt Handling Process

Hardware Interrupt: The I/O device (e.g., disk controller) sends an interrupt signal to the CPU.

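CPU Saves Current State: The CPU stops executing the current thread (Thread B), saves enough of its state to resume it later (typically the program counter and status flags, with the kernel's interrupt entry code saving the remaining registers), and jumps to the interrupt handler (a part of the OS kernel).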

Interrupt Handler Executes: The interrupt handler identifies the source of the interrupt (e.g., the specific I/O operation) and marks the corresponding thread (Thread A) as Runnable.

Thread A is Added to the Ready Queue: The OS moves Thread A from the waiting queue to the ready queue, making it eligible for scheduling.
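The bookkeeping behind these steps can be sketched with two data structures: a ready queue and a table of threads waiting on specific requests. This is a simplified model under stated assumptions. `ToyKernel`, `block_on_io`, `interrupt_handler`, and the numeric request IDs are hypothetical, and each request is assumed to have exactly one waiting thread. Real kernels differ. In Linux, for instance, a sleeping task sits on a wait queue and is woken by the `wake_up()` family of functions, and interrupt handlers usually defer most of their work.

```python
from collections import deque

class ToyKernel:
    """Hypothetical kernel bookkeeping: one ready queue plus per-request waiters."""

    def __init__(self):
        self.ready = deque()   # runnable threads, in FIFO order
        self.waiting = {}      # request id -> thread blocked on that request
        self.state = {}        # thread name -> "BLOCKED" / "RUNNABLE"

    def block_on_io(self, thread, request_id):
        # Called when the running thread issues a blocking I/O request.
        self.state[thread] = "BLOCKED"
        self.waiting[request_id] = thread

    def interrupt_handler(self, request_id):
        # Called when the device signals that request_id has completed.
        thread = self.waiting.pop(request_id, None)
        if thread is None:
            return None               # no waiter: spurious or already handled
        self.state[thread] = "RUNNABLE"
        self.ready.append(thread)     # now eligible for scheduling
        return thread

k = ToyKernel()
k.ready.append("B")                   # Thread B is runnable
k.block_on_io("A", request_id=42)     # Thread A blocks on request 42
k.interrupt_handler(42)               # the disk finishes request 42
print(list(k.ready), k.state["A"])    # ['B', 'A'] RUNNABLE
```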

4. Rescheduling the Blocked Thread

How the OS Resumes the Blocked Thread

Once the blocked thread (Thread A) is marked as runnable, the OS must decide when to resume its execution. This decision is made by the scheduler, which is responsible for allocating CPU time to threads.

Scheduling Policies

The OS uses various scheduling policies to determine which thread to run next:

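Preemptive Multitasking: The OS can interrupt a running thread to give CPU time to another thread, for example when the running thread's time slice expires or when a higher-priority thread (such as a newly woken Thread A) becomes runnable.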

Priority Scheduling: Threads with higher priority are given preference.

Round-Robin Scheduling: Runnable threads take turns, each running for at most a fixed time slice before moving to the back of the ready queue.

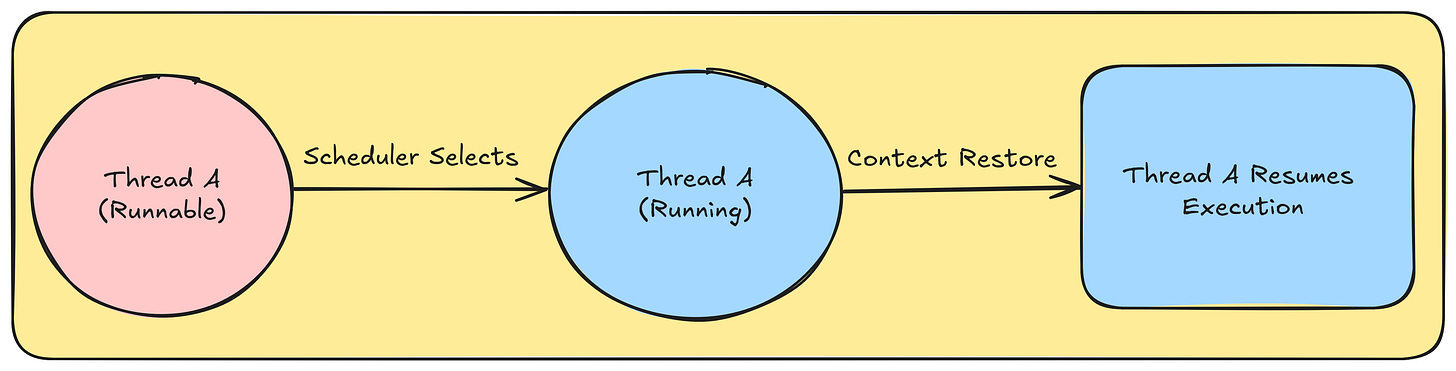
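The following sketch combines priority scheduling with round-robin among threads of equal priority, with preemption when a time slice expires. It is a simplified simulation under stated assumptions: every thread is ready at time zero, none of them block, and priorities are static. `schedule` is a hypothetical helper, and a lower number means a higher priority.

```python
import heapq
from itertools import count

def schedule(threads, time_slice):
    """
    Simulate priority scheduling with round-robin among equal priorities.
    `threads` maps name -> (priority, remaining_work). Returns the run
    order as a list of (name, units_run) pairs.
    """
    seq = count()  # increasing tie-breaker gives FIFO order within a priority
    ready = [(prio, next(seq), name, work) for name, (prio, work) in threads.items()]
    heapq.heapify(ready)
    timeline = []
    while ready:
        prio, _, name, work = heapq.heappop(ready)  # highest priority, oldest first
        ran = min(time_slice, work)
        timeline.append((name, ran))
        if work - ran > 0:
            # Time slice expired: preempt and requeue behind equal-priority peers.
            heapq.heappush(ready, (prio, next(seq), name, work - ran))
    return timeline

print(schedule({"A": (1, 3), "B": (1, 2), "C": (2, 1)}, time_slice=1))
# [('A', 1), ('B', 1), ('A', 1), ('B', 1), ('A', 1), ('C', 1)]
```

A and B share priority 1, so they alternate slices. C has lower priority and runs only after both have finished.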

Context Restore and Execution

Scheduler Selects Thread A: The scheduler picks Thread A from the ready queue.

Context Restore: The OS restores Thread A's saved state (registers, program counter, etc.).

Thread A Resumes Execution: Thread A continues execution from the point where it was blocked: its read() call returns with the requested data.

5. The Big Picture: From Blocking to Resumption

Let’s tie everything together with a high-level overview of the entire process:

Thread A Issues a Blocking I/O Request: Thread A is running and requests data from a file.

Thread A Blocks: The OS saves Thread A's state and marks it as blocked.

Context Switch to Thread B: The OS schedules Thread B to run on the CPU.

I/O Operation Completes: The disk controller sends an interrupt to the CPU.

Interrupt Handler Marks Thread A as Runnable: The OS moves Thread A to the ready queue.

Scheduler Resumes Thread A: The OS restores Thread A's state and resumes its execution.
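The whole lifecycle can be simulated on a single toy CPU using Python generators as stand-in threads. This is an analogy, not how a kernel works. A suspended generator keeps its own resume point and local variables, much as a saved thread context does. Each `yield` is treated as a trap into the "kernel". The names `run`, `thread_a`, `thread_b`, and the device `"disk0"` are hypothetical, and the interrupt is simulated by a completion time measured in scheduler ticks.

```python
from collections import deque

def thread_a():
    data = yield ("read", "disk0")        # blocking I/O request: A gives up the CPU
    yield ("log", f"A resumed with {data!r}")

def thread_b():
    for i in range(2):
        yield ("log", f"B working, step {i}")

def run(threads, completions, max_ticks=100):
    """
    Toy single-CPU kernel. `threads` maps name -> generator; `completions`
    maps device -> (tick when its interrupt fires, data delivered).
    """
    ready = deque((name, None) for name in threads)  # (thread, value to resume with)
    waiting = {}                                     # device -> blocked thread
    log, tick = [], 0
    while (ready or waiting) and tick < max_ticks:
        # Simulated interrupt: wake any thread whose device has finished.
        for dev, (when, data) in completions.items():
            if tick >= when and dev in waiting:
                ready.append((waiting.pop(dev), data))
        if not ready:                                # CPU idle until an interrupt
            tick += 1
            continue
        name, value = ready.popleft()                # scheduler picks a thread
        try:
            op, arg = threads[name].send(value)      # resume where it left off
        except StopIteration:
            log.append(f"{name} terminated")
            tick += 1
            continue
        if op == "read":
            waiting[arg] = name                      # block: move to the wait queue
            log.append(f"{name} blocked on {arg}")
        else:
            log.append(arg)
            ready.append((name, None))               # still runnable: back of queue
        tick += 1
    return log

for line in run({"A": thread_a(), "B": thread_b()}, {"disk0": (2, b"data")}):
    print(line)
# A blocked on disk0
# B working, step 0
# B working, step 1
# A resumed with b'data'
# B terminated
# A terminated
```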

6. Why This Process Matters

Efficient CPU Utilization: By removing blocked threads from the CPU and scheduling other runnable threads, the OS keeps the CPU busy with useful work whenever at least one thread is runnable, instead of letting it idle while a device finishes an I/O request. This is critical for maximizing system performance.

Responsiveness: Because blocked threads are resumed quickly once their I/O operations complete, applications remain responsive. For example, a multithreaded web server can keep serving other requests while the thread handling one request waits for slow disk or network I/O.

Scalability: This mechanism allows modern operating systems to handle thousands of threads and processes efficiently, making them suitable for high-performance computing and large-scale applications.

7. Conclusion

The operating system’s handling of blocking I/O is a cornerstone of modern multitasking. By leveraging context switching, interrupt handling, and efficient scheduling, the OS ensures that blocked threads are seamlessly removed from the CPU and resumed once their I/O operations are complete. This process not only maximizes CPU utilization but also ensures that applications remain responsive and scalable.
