Understanding "Memory Allocated at Compile Time" in C and C++

The Role of Compile-Time Allocation in C/C++ Performance

Introduction

When working with languages like C and C++, memory allocation is a fundamental concept. Programmers often encounter terms like "static allocation" and "dynamic allocation," but one phrase that can be particularly confusing is "memory allocated at compile time." This post explains what that phrase means, how it works, and why it matters.

Static vs. Dynamic Memory Allocation

Before diving into the specifics of compile-time memory allocation, it's essential to understand the broader context of memory allocation in C and C++. Memory allocation can be broadly categorized into two types:

Static Memory Allocation: Memory is allocated at compile time and is fixed throughout the program's execution.

Dynamic Memory Allocation: Memory is allocated and deallocated during runtime, allowing for more flexibility.

What is Compile-Time Memory Allocation?

The phrase "memory allocated at compile time" refers to the compiler (together with the linker) fixing the size and placement of variables and data structures while the program is built. No RAM is handed out at that point. Instead, the memory addresses for these variables are determined before the program runs, rather than during its execution, and the memory itself is provided when the program is loaded.

How Does It Work?

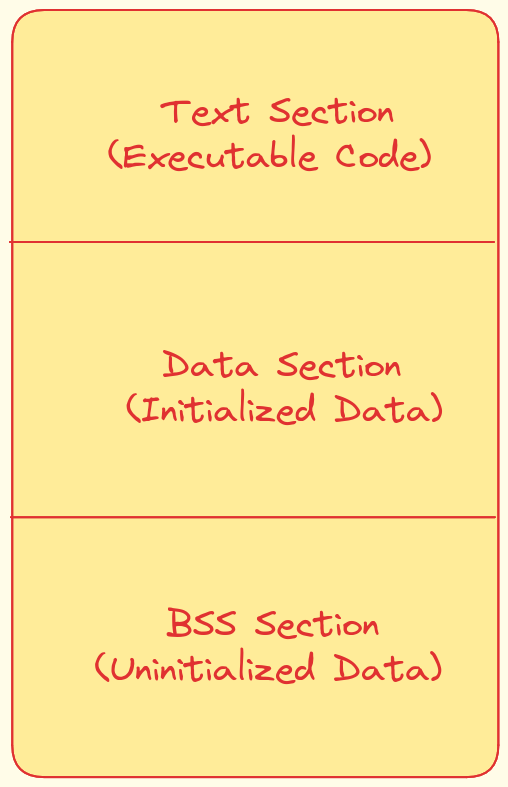

When you write code in C or C++, the compiler converts it into machine code, which is stored in an executable file. This executable file contains not only the machine instructions but also the memory layout of the program. The memory layout includes sections for different types of data, such as:

Text Section: Contains the executable code.

Data Section: Contains initialized global and static variables.

BSS Section: Contains uninitialized global and static variables, which are zero-initialized at runtime.

Example: Global Variables

Consider the following code:

int array[100];

Here, array is a global array of 100 integers. The compiler knows the size of int and the size of the array, so it reserves a fixed amount of memory in the BSS section of the executable. The memory address for array is determined at compile time and is fixed for the entire duration of the program's execution.

Example: Initialized Global Variables

Now, consider the following code:

int array[] = {1, 2, 3, 4};

In this case, the compiler not only reserves memory for array but also includes the initial values in the data section of the executable. The memory for array is allocated at compile time, and the initial values are stored in the executable file.

Assembly Code Insight

To better understand how the compiler handles static memory allocation, let's look at the assembly code generated by the compiler. Consider the following C++ code:

int a[4];

int b[] = {1, 2, 3, 4};

int main()

{

return 0;

}

When compiled with GCC 12.2 for x86-64, the assembly code might look like this:

a:

.zero 16

b:

.long 1

.long 2

.long 3

.long 4

main:

push rbp

mov rbp, rsp

mov eax, 0

pop rbp

ret

In this assembly code:

.zero 16 reserves 16 bytes (4 integers) for array a in the BSS section.

.long 1, .long 2, etc., store the initial values for array b in the data section.

Virtual Memory and Address Translation

It's important to note that the memory addresses recorded in the executable are virtual. The operating system (OS) and the memory management unit (MMU) handle the translation of these virtual addresses to physical addresses at runtime. This abstraction allows the program to assume it has an entire address space to itself, simplifying memory management.

Why Does It Matter?

Understanding compile-time memory allocation is crucial for several reasons:

Performance: Static allocation can be faster because no allocator call is needed while the program runs; the memory is already reserved and initialized at startup.

Predictability: Memory usage is predictable, which is beneficial for systems with limited resources.

Debugging: Knowing the memory layout can help in debugging and understanding program behavior.

Runtime Memory Allocation

In contrast to compile-time allocation, dynamic memory allocation occurs during runtime. The malloc() function in C and the new operator in C++ are used to allocate memory dynamically. This allows for more flexibility but comes with the overhead of managing memory manually.

Example: Dynamic Allocation

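int* ptr = malloc(100 * sizeof(int));

In this example, memory is allocated at runtime, and the pointer ptr points to the starting address of the allocated memory. The request is made while the program runs, and the size passed to malloc() could just as well be a value computed at runtime, which is why the allocation is considered dynamic. (In C++, the result of malloc() must be cast to int* explicitly.)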

The Relationship Between Compile-Time and Runtime Allocation

While memory allocation is often associated with runtime, compile-time allocation plays a significant role in determining the memory layout of the program. The executable file contains the memory addresses for static allocations, and the runtime environment uses this information to set up the program's memory space.

Executable Size and Static Allocation

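If you declare a statically allocated variable, such as int array[100];, the effect on the size of the executable depends on which section it lands in. An array with non-zero initial values goes in the data section, and its contents are stored in the file, so the executable grows by roughly the size of the array. An array without an initializer goes in the BSS section, which records only how much space is needed, so the file grows by just a few bytes. In that case it is the process image in memory, not the file on disk, that is larger by the size of the array once the program is loaded.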

Memory Initialization

Static variables in the data section are initialized with their specified values, while those in the BSS section are zero-initialized by the OS at program startup. This initialization is part of the program's loading process and occurs before the main() function is called.

Visualizing Memory Layout

To better understand the memory layout, recall the three sections described earlier:

Text Section: Contains the machine code instructions.

Data Section: Contains global and static variables with initial values.

BSS Section: Contains global and static variables without initial values (zero-initialized at runtime).

Conclusion

The phrase "memory allocated at compile time" refers to the compiler reserving space for variables and data structures while the program is built. That space is laid out in specific sections of the executable (data or BSS) and is associated with virtual addresses that are translated to physical addresses at runtime.

Understanding compile-time memory allocation is essential for grasping the fundamentals of memory management in C and C++. It provides insights into how programs are laid out in memory and how they are initialized at startup.

Experimenting with Disassembly

To deepen your understanding, try compiling simple C or C++ programs and examining the generated assembly code. Tools like gcc -S (for assembly output) and online disassembly tools can be very helpful.

Final Thoughts

Memory allocation at compile time is a fundamental concept that underpins the behavior of C and C++ programs. By understanding how the compiler allocates and initializes memory, you can write more efficient, predictable, and maintainable code. So, the next time you hear "memory allocated at compile time," you'll know exactly what it means!

Great article. Is there a separate data section, or are BSS and non-BSS collectively known as the data section?

Great article! Just found one little issue. The sentence: "If you declare a statically allocated variable, such as int array[100];the size of the executable will indeed increase by the size of the array" is not entirely correct as far as I know. The size of the executable will increase only by a few bytes because it will only store the size of the array in the executable but when system loads the executable its RAM image will indeed be larger by the size of the array because it will read the size from BSS segment and allocate that much additional memory for the process.